TL;DR

Don’t bother.

Hardware Selection

I thought I would try my hand at mining crypto in the cloud. After all, who wouldn’t want to be a bitcoin billionaire?

Azure offers a few VM sizes with GPUs installed. I tried:

- Standard NV6 (6 vcpus, 56 GiB memory) - £783.47 /month

- Standard NC6 (6 vcpus, 56 GiB memory) - £365.62 /month (promotion)

It turns out the NC6 is an older model that uses the NVIDIA Tesla K80, which many of the crypto algorithms no longer support. I pulled out all the stops and went for the NV6 at £783.47 a month.

The NV6 has an NVIDIA Tesla M60 graphics card installed (pictured above), which the algorithms seemed quite happy with.

For anyone interested in the benchmarks:

- DaggerHashimoto ETH - 2.63 MH/s

- DaggerHashimoto EXP - 9.01 MH/s

- RandomX (CPU) - 2305.57 H/s

- RandomX - 284.32 H/s

- KawPow - 3.78 MH/s

- BeamHashIII - 6.90 H/s

- Ubqhash - 17.80 MH/s

OS Selection

I went with Windows 10 Preview. I didn’t want any of that Windows Server security lockdown getting in the way of my money-making hackery.

Software Installation

- Install the NVIDIA driver from the NVIDIA website.

- I went for the Kryptex Miner. It was extremely easy to use. It mines whichever cryptocurrency is most profitable at that point in time (I think). I installed it, logged in, and ran the benchmarks to get started.

- Profit.

Revenue

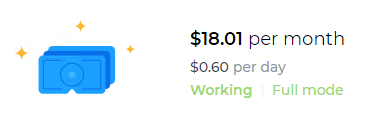

The next step was to sit back and watch the money roll in. Conveniently, the software gives you an estimate of your expected earnings.

Oh dear.

That’s £13.00 /month.

We’re losing £770.47 /month, a profit margin of about -5927%.
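For anyone who wants to check the maths, here is a minimal sketch of the loss and margin calculation. It assumes "profit margin" means profit divided by revenue, and it uses only the two figures from this post: the NV6 price and Kryptex’s estimated earnings. The function names are just for illustration.

```python
# Figures from the post: NV6 monthly price and Kryptex's estimated earnings.
VM_COST_GBP_PER_MONTH = 783.47
REVENUE_GBP_PER_MONTH = 13.00


def monthly_profit(revenue: float, cost: float) -> float:
    """Profit in GBP per month; a negative value is a loss."""
    return revenue - cost


def profit_margin_percent(revenue: float, cost: float) -> float:
    """Profit as a percentage of revenue (assumed definition of margin)."""
    return monthly_profit(revenue, cost) / revenue * 100


if __name__ == "__main__":
    profit = monthly_profit(REVENUE_GBP_PER_MONTH, VM_COST_GBP_PER_MONTH)
    margin = profit_margin_percent(REVENUE_GBP_PER_MONTH, VM_COST_GBP_PER_MONTH)
    print(f"Loss: £{-profit:.2f} /month")
    print(f"Margin: {margin:.0f}%")
```

Dividing by revenue rather than cost is what makes the margin so extreme: a tiny £13 of earnings ends up as the denominator.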

I ran the machine for over 10 days, and the estimate proved fairly accurate.

Energy

Microsoft is a carbon-neutral company, but we’re still burning energy unnecessarily.

The Kryptex software reports that the GPU uses 100W of power. I can’t get a precise figure for the CPU consumption, so let’s assume it’s around the same, for a total of 200W.

The PUE (Power Usage Effectiveness) for Azure, a measure of a data centre’s energy efficiency, is 1.125. Multiplying 200W by 1.125 gives 225W of power required to run the server, including data centre overhead such as cooling.

Running 24 hours a day for a 30-day month, that’s 162 kWh /month.

The average cost of electricity in the UK in 2020 was 17.2p/kWh.

That’s an electricity bill of £27.86 /month.
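Here is the energy chain above as a small Python sketch, under the same assumptions as the post: a guessed 100W for the CPU, Azure’s quoted PUE of 1.125, the 2020 UK average price of 17.2p/kWh, and a 30-day month (720 hours), which is what the 162 kWh figure implies. The helper names are hypothetical.

```python
# Inputs from the post; the CPU wattage is a rough guess, not a measurement.
GPU_WATTS = 100.0              # reported by Kryptex
CPU_WATTS = 100.0              # assumed to be "around the same" as the GPU
AZURE_PUE = 1.125              # Power Usage Effectiveness quoted for Azure
HOURS_PER_MONTH = 24 * 30      # assumes a 30-day month
UK_PRICE_GBP_PER_KWH = 0.172   # 2020 UK average, 17.2p/kWh
REVENUE_GBP_PER_MONTH = 13.00  # Kryptex's estimated earnings


def facility_watts(it_watts: float, pue: float) -> float:
    """Total data-centre power needed to run `it_watts` of server load."""
    return it_watts * pue


def monthly_kwh(watts: float, hours: float) -> float:
    """Energy in kWh for a constant load of `watts` over `hours`."""
    return watts * hours / 1000


def monthly_bill(kwh: float, price_per_kwh: float) -> float:
    """Electricity cost in GBP for `kwh` at a flat `price_per_kwh`."""
    return kwh * price_per_kwh


if __name__ == "__main__":
    watts = facility_watts(GPU_WATTS + CPU_WATTS, AZURE_PUE)
    kwh = monthly_kwh(watts, HOURS_PER_MONTH)
    bill = monthly_bill(kwh, UK_PRICE_GBP_PER_KWH)
    print(f"{watts:.0f} W, {kwh:.0f} kWh/month, £{bill:.2f}/month")
    print("Earnings cover the power bill:", REVENUE_GBP_PER_MONTH >= bill)
```

Running it prints 225 W, 162 kWh/month and £27.86/month, and shows that the estimated £13.00 of earnings doesn’t cover the power bill.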

I’m sure Microsoft pay less for their electricity, but it looks like mining can’t even pay for its own power, never mind the cost of the hardware. The GPU alone costs around £1000.

How do the pros do it?

Crypto is only mined profitably in countries with low power costs, using custom hardware.

Interestingly, Microsoft have experimented with ASIC hardware to improve compression performance in their storage systems, though there’s currently no option to use these machines directly.

Conclusion

It’s not financially viable to mine crypto in the cloud. Don’t bother.